I’m Anika Tabassum, a machine learning research scientist at Oak Ridge National Laboratory (ORNL), where I develop deep learning models for multi-scale and multimodal scientific data. This is mostly time-series and image data collected by battery, fusion energy, and materials scientists. My interests broadly lie in foundation and generative models and in multimodal learning for scientific discovery. I have explored vision transformers, reinforcement learning, foundation models for image segmentation, LLM-based time-series architectures, and diffusion models.

I received my PhD from Virginia Tech, advised by Prof. B. Aditya Prakash. There I worked on domain-guided machine learning to help prepare for and mitigate power system failures and disaster vulnerabilities. My PhD research was funded by an NSF fellowship, and I also worked as an affiliated graduate researcher at Georgia Tech.

Our team, DeepCOVID, won first prize for its COVID-19 forecasting model in the Facebook-CDC epidemic forecasting data challenge in 2021. I was selected as a Rising Star at UT Austin in 2023 and as an outstanding postdoctoral researcher at ORNL in 2022.

I'm best reached via email and always open to collaboration. Here is my resume.

Selected Projects

LLM-Enhanced Graph Neural Network

We aim to build multimodal embeddings of concept-concept relations across different disciplines. As part of this project, we enhance multimodal embeddings by combining LLM embeddings, molecular graph embeddings, and chemical formula embeddings through graph neural networks that treat the relations among these embeddings as a graph. The research questions we seek to answer are:

- Can LLMs improve a GNN built from different data modalities?

- Do graphs combined with LLMs capture concept-concept relations better than LLM embeddings alone?

- Are there advantages to augmenting with chemical formulae and molecular graph structures over using an LLM alone?

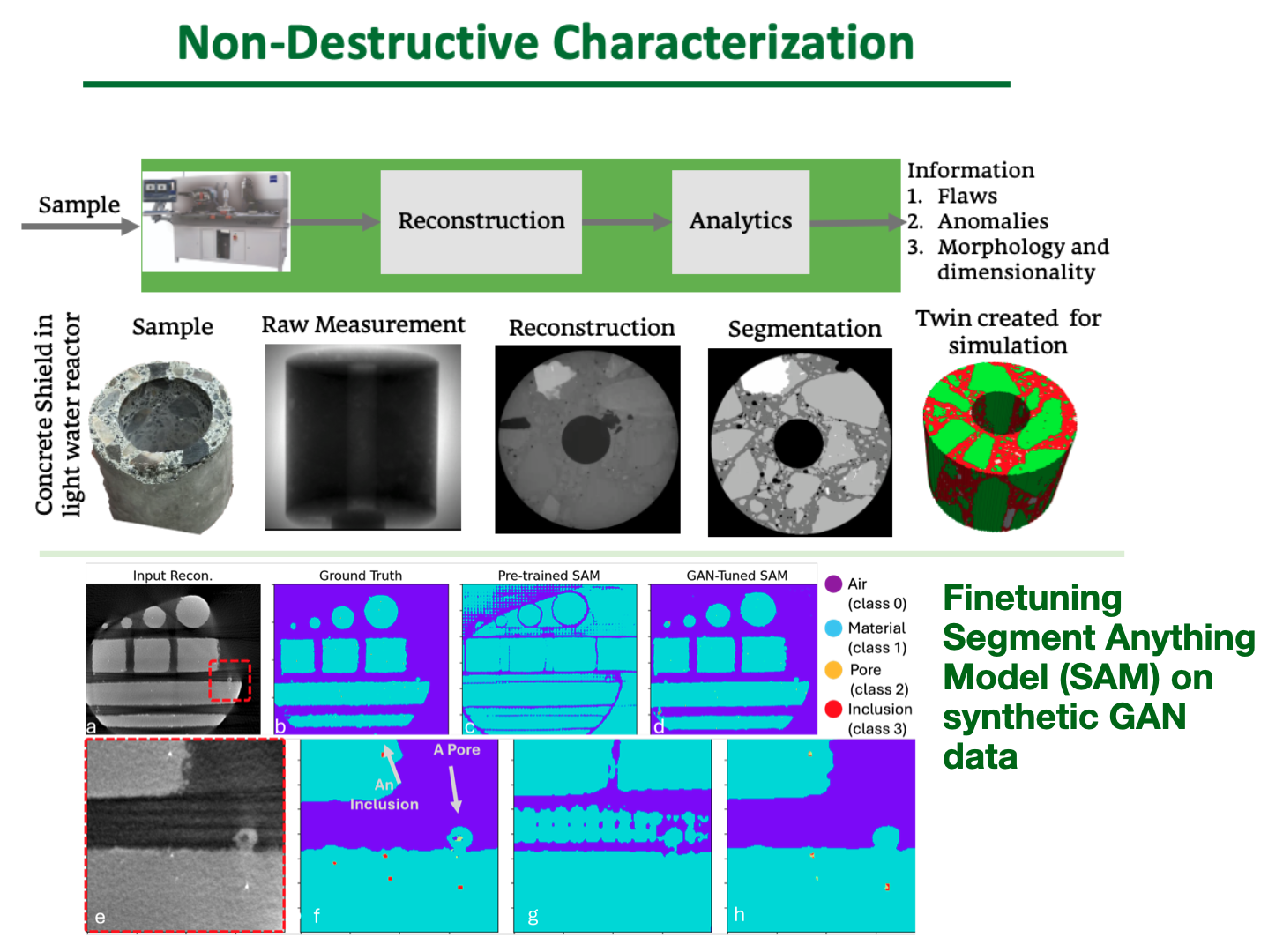
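To make the graph idea concrete, here is a minimal sketch, not the project's pipeline, of turning concept embeddings into a graph and running one message-passing step. It assumes each concept already has a fused embedding (hypothetically, LLM text features concatenated with formula features); the k-nearest-neighbor construction, the helper names, and the toy vectors are all illustrative assumptions.

```python
import math


def cosine(u, v):
    # Cosine similarity between two equal-length vectors (0.0 if either is all zeros).
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def build_knn_graph(embeddings, k=2):
    # Hypothetical graph construction: link each concept to its k most
    # similar other concepts by cosine similarity.
    names = list(embeddings)
    neighbors = {}
    for i in names:
        scored = sorted(
            ((cosine(embeddings[i], embeddings[j]), j) for j in names if j != i),
            reverse=True,
        )
        neighbors[i] = [j for _, j in scored[:k]]
    return neighbors


def mean_aggregate(embeddings, neighbors):
    # One message-passing step: each node's new embedding is the mean of
    # its own embedding and its neighbors' embeddings.
    updated = {}
    for i, nbrs in neighbors.items():
        group = [embeddings[i]] + [embeddings[j] for j in nbrs]
        updated[i] = [sum(col) / len(group) for col in zip(*group)]
    return updated


# Toy, made-up fused embeddings for three materials concepts.
emb = {
    "LiCoO2": [1.0, 0.0, 0.2],
    "LiFePO4": [0.9, 0.1, 0.3],
    "graphite": [0.0, 1.0, 0.1],
}
graph = build_knn_graph(emb, k=1)
smoothed = mean_aggregate(emb, graph)
```

A trained GNN would replace the plain mean with learned, weighted aggregation; the fixed average is used here only to keep the example dependency-free.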

Segment Anything (SAM) for Scientific Imaging

Collaborator: Multiscale Sensor Analytics

Non-destructive characterization (NDC) is critical for understanding the processing, microstructure, and properties of materials, and for correlating them with material performance without damaging the sample under study. However, it poses several challenges:

- CT scan images require post-processing, typically with segmentation models.

- State-of-the-art (SOTA) models are costly because they require human annotation.

- Having only a few labeled samples (data paucity) degrades model performance.

code | paper | slides

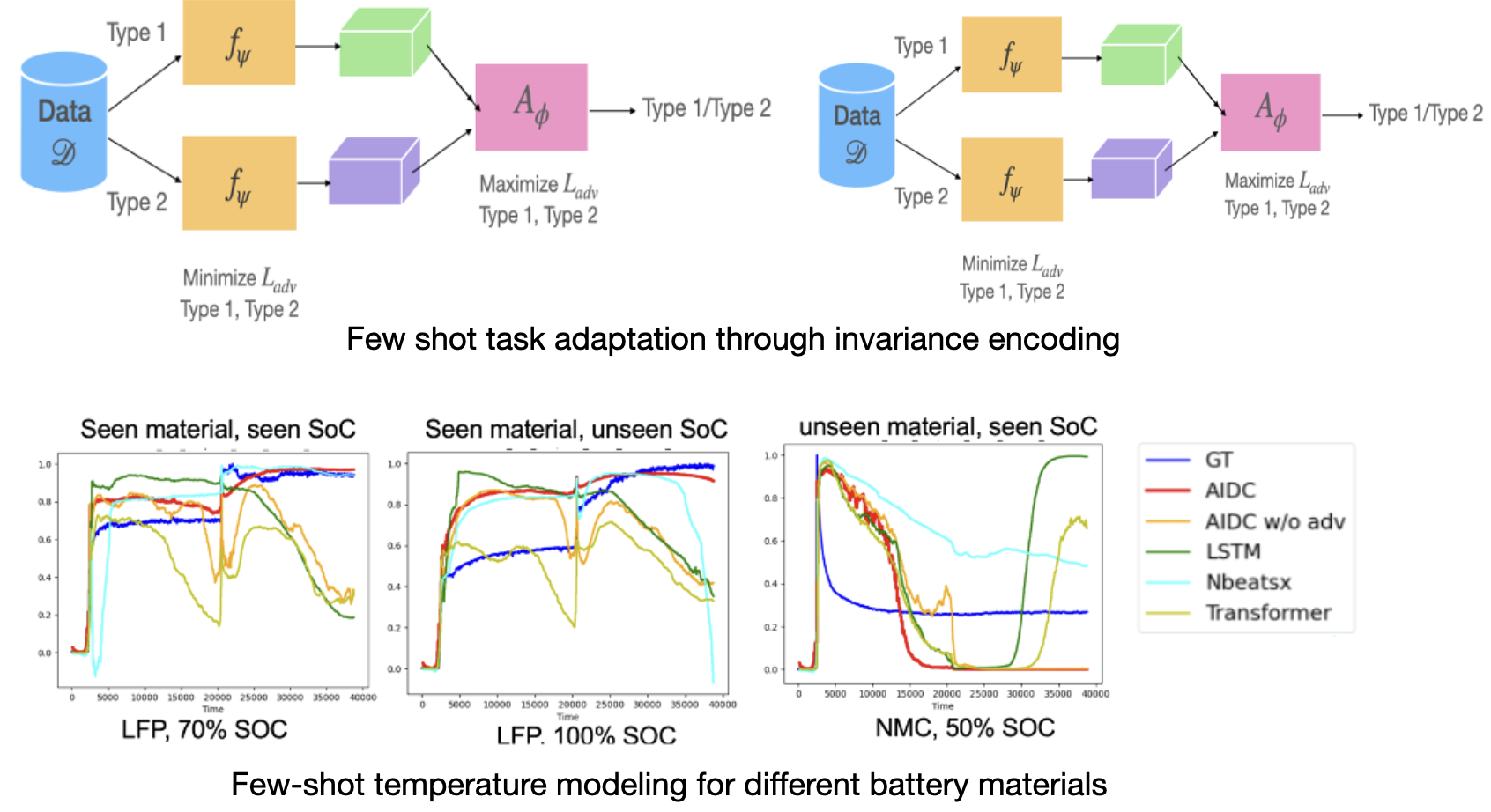

Few-Shot Task Adaptation for Battery Safety Modeling

Collaborator: Stevens Institute of Technology

We model and detect early the thermal runaway and short-circuit events that pose a major safety risk in Li-ion batteries. To do so, we develop an invariant encoding architecture that frames thermal runaway modeling as few-shot generalization across battery properties such as capacity and material.

- Develop a few-shot, task-adapted forecasting model under different battery conditions (material, capacity, voltage, etc.).

- Propose a novel invariant encoding architecture to counter data paucity.

- Demonstrate that the model successfully captures thermal runaway events for both seen and unseen battery conditions.
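Few-shot evaluation over "seen" and "unseen" battery conditions is usually organized as episodes. The sketch below shows one generic way to build them; it is not the invariant encoding architecture itself. It assumes each recording is tagged with a hypothetical condition tuple such as (material, capacity in Ah), and the function names are illustrative.

```python
import random
from collections import defaultdict


def make_episodes(samples, n_support, n_query, seed=0):
    # samples: list of (condition, series) pairs, where condition is a
    # hypothetical hashable tag such as ("NMC", 2.5).
    rng = random.Random(seed)
    by_condition = defaultdict(list)
    for condition, series in samples:
        by_condition[condition].append(series)
    episodes = []
    for condition in sorted(by_condition):  # deterministic task order
        series_list = list(by_condition[condition])
        if len(series_list) < n_support + n_query:
            continue  # too few recordings to form a support/query task
        rng.shuffle(series_list)
        episodes.append({
            "condition": condition,
            "support": series_list[:n_support],  # few labeled examples to adapt on
            "query": series_list[n_support:n_support + n_query],  # held-out for evaluation
        })
    return episodes


def split_seen_unseen(episodes, unseen_conditions):
    # Hold out entire conditions so evaluation includes battery types never seen in training.
    seen = [e for e in episodes if e["condition"] not in unseen_conditions]
    unseen = [e for e in episodes if e["condition"] in unseen_conditions]
    return seen, unseen
```

Holding out whole conditions, rather than random recordings, is what makes the "unseen" evaluation meaningful.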

Causality through Attention for Neuromorphic Simulator

Collaborator: University of Washington

This project explores the potential of transformer models for learning Granger causality in networks with complex nonlinear dynamics at every node, as in neurobiological and biophysical networks.

Our study primarily focuses on a proof-of-concept investigation based on simulated neural dynamics, for which the ground-truth causality is known through the underlying connectivity matrix. For transformer models trained to forecast neuronal population dynamics, we show that the cross-attention module effectively captures the causal relationships among neurons, with accuracy equal or superior to that of the most popular Granger causality discovery method.

paper | code | slides

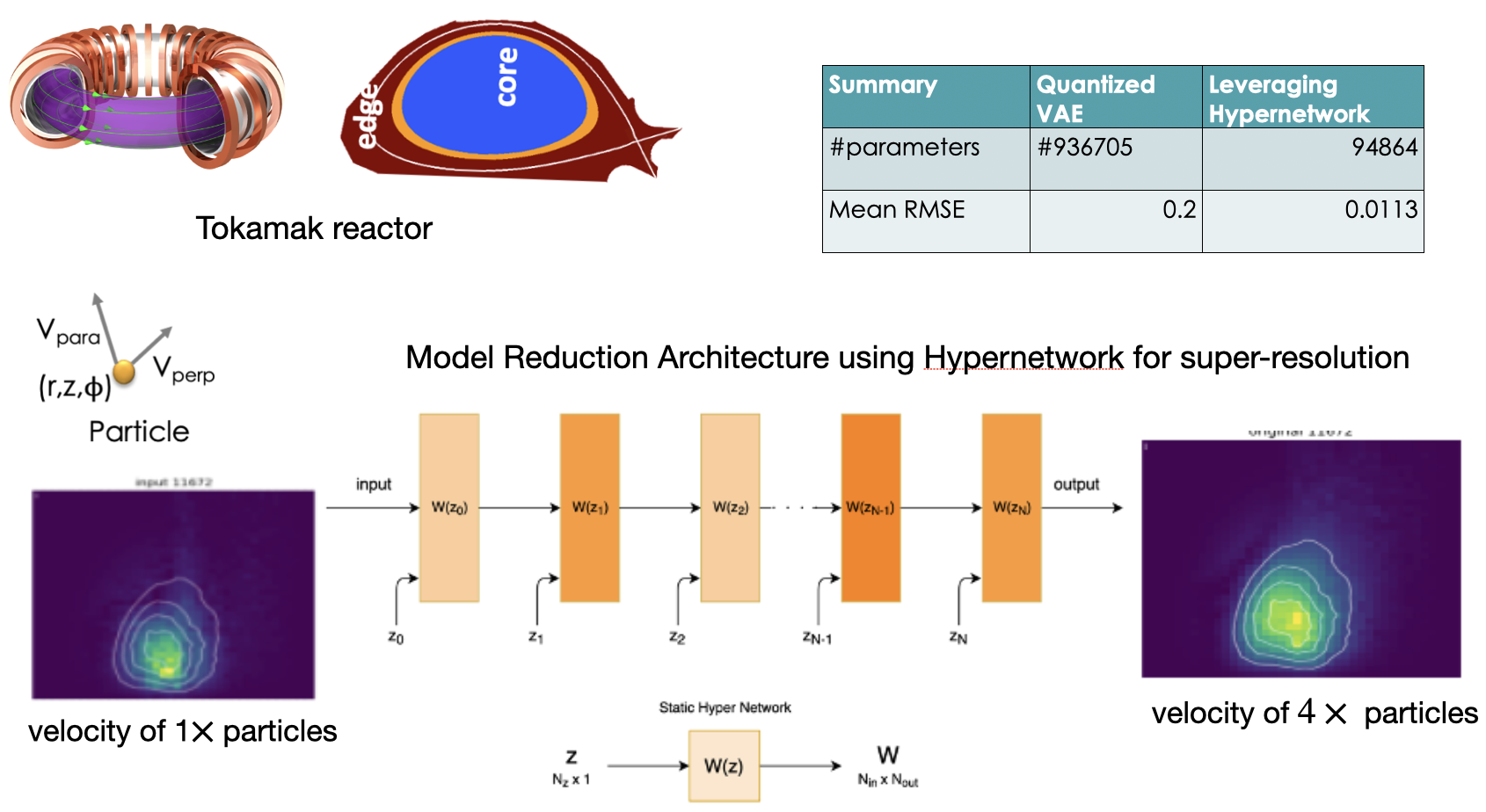
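The comparison with a known connectivity matrix described above can be sketched as follows. This is an illustration under assumptions, not the paper's evaluation: it supposes the cross-attention weights have already been averaged into an n-by-n matrix where row i is the target neuron and column j the source, and it scores simple edge agreement at a hypothetical threshold (the actual metric may differ).

```python
def attention_to_adjacency(attn, threshold):
    # attn[i][j]: averaged cross-attention that target neuron i places on source neuron j.
    # Predict a causal edge j -> i when the weight exceeds the threshold; ignore self-loops.
    n = len(attn)
    return [[1 if i != j and attn[i][j] > threshold else 0 for j in range(n)]
            for i in range(n)]


def edge_accuracy(pred, truth):
    # truth[i][j] = 1 means neuron j drives neuron i in the simulated connectivity.
    # Accuracy is the fraction of off-diagonal entries where prediction and truth agree.
    n = len(truth)
    pairs = [(i, j) for i in range(n) for j in range(n) if i != j]
    correct = sum(pred[i][j] == truth[i][j] for i, j in pairs)
    return correct / len(pairs)


# Toy 3-neuron example: neuron 1 drives neuron 0, and neuron 2 drives neuron 1.
attn = [[0.5, 0.4, 0.1],
        [0.05, 0.6, 0.35],
        [0.1, 0.1, 0.8]]
truth = [[0, 1, 0],
         [0, 0, 1],
         [0, 0, 0]]
pred = attention_to_adjacency(attn, threshold=0.3)
```

In practice a threshold-free score such as ROC AUC over the off-diagonal attention weights avoids having to pick the threshold by hand.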

Particle Super Resolution for Fusion Energy

Collaborator: Princeton Plasma Physics Laboratory

Generating high-fidelity, high-resolution scientific data is challenging because of the complex underlying physics. Existing techniques for producing high-fidelity scientific data also require substantial computational time and resources.

We analyze the performance of popular deep-learning-based data reduction models for inferring high-fidelity scientific data from low-fidelity data.

As a preliminary approach, we develop a spatially aware hypernetwork model that learns the global properties of the data along with its local properties. For the analysis, we use particle density data from XGC plasma simulations for fusion energy. Our goal is for the hypernetwork to reduce the data while generalizing to super-resolve particles across the core, edge, and boundary regions.

paper | code | slides

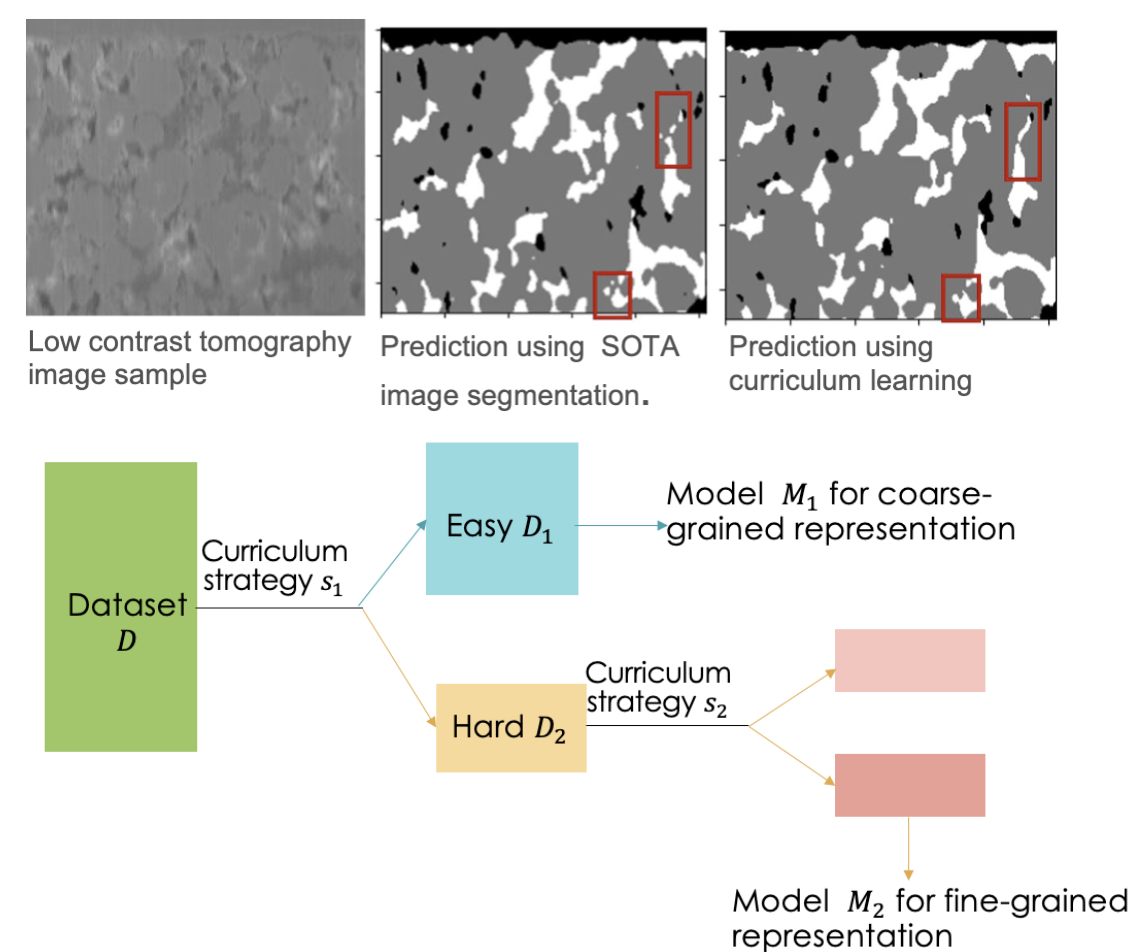
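The hypernetwork idea can be illustrated with a deliberately tiny toy. This is a minimal sketch, not the model used with the XGC data. It assumes a normalized radial position r (hypothetically 0 at the core, 1 at the boundary) and makes every weight of a small linear "target" layer an affine function of r. The shared slopes and intercepts play the global role, while the generated weights vary locally with position. All names and sizes are illustrative.

```python
def hyper_weights(r, slopes, intercepts, in_dim, out_dim):
    # Hypernetwork: each target weight is slope * r + intercept, reshaped to out_dim x in_dim.
    flat = [a * r + b for a, b in zip(slopes, intercepts)]
    return [flat[k * in_dim:(k + 1) * in_dim] for k in range(out_dim)]


def super_resolve(x, r, slopes, intercepts, out_dim):
    # Target network: map low-resolution features x to out_dim higher-resolution values.
    W = hyper_weights(r, slopes, intercepts, len(x), out_dim)
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def squared_error(y, target):
    return sum((a - b) ** 2 for a, b in zip(y, target))


def sgd_step(x, r, target, slopes, intercepts, lr=0.01):
    # One gradient-descent step on squared error. Only the hypernetwork's
    # parameters are trained; the target weights are always generated from them.
    in_dim, out_dim = len(x), len(target)
    y = super_resolve(x, r, slopes, intercepts, out_dim)
    new_slopes, new_intercepts = list(slopes), list(intercepts)
    for k in range(out_dim):
        grad_y = 2.0 * (y[k] - target[k])  # dL/dy_k
        for i in range(in_dim):
            idx = k * in_dim + i
            # y_k = sum_i (a_ki * r + b_ki) * x_i, so dy_k/da_ki = x_i * r and dy_k/db_ki = x_i.
            new_slopes[idx] -= lr * grad_y * x[i] * r
            new_intercepts[idx] -= lr * grad_y * x[i]
    return new_slopes, new_intercepts
```

Because the weights are a function of position, one set of hypernetwork parameters can serve every region. That is the property that makes generalization from core to edge to boundary plausible.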

Curriculum Learning-based Noisy CT Image Segmentation

Collaborator: Stevens Institute of Technology

The goal of this project is to predict complex material boundaries of electrodes from low-contrast X-ray computed tomography images. The X-ray CT images collected from material samples are often noisy and suffer from poor resolution.

- Propose curriculum learning for solving complex image segmentation problems.

- Introduce a hierarchical curriculum learning technique to predict material phase boundaries.

- Our model improves performance on images with noisy artifacts by up to 8.5%.

- Our model can help battery researchers understand material distributions, a step toward 3D reconstruction of an electrode.
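The hierarchical curriculum is not detailed here, so the sketch below shows only the generic easy-to-hard pacing that curriculum learning builds on. It assumes a hypothetical difficulty score: images with a smaller spread of pixel intensities (lower contrast) count as harder. The helper names are illustrative.

```python
import math
from statistics import pstdev


def contrast_difficulty(image):
    # Hypothetical difficulty score: lower intensity spread (poorer contrast) means harder,
    # so the negated standard deviation sorts high-contrast images first.
    pixels = [p for row in image for p in row]
    return -pstdev(pixels)


def curriculum_stages(samples, difficulty, n_stages):
    # Sort from easiest to hardest, then release the data in n_stages nested pools:
    # each stage keeps everything from the previous stage and adds harder samples.
    ordered = sorted(samples, key=difficulty)
    return [ordered[: math.ceil(len(ordered) * s / n_stages)]
            for s in range(1, n_stages + 1)]
```

A segmentation model would then be trained for some epochs on each pool in turn, ending on the full, noisiest dataset.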